Kubernetes for different stages of your projects ¶

Goal ¶

When starting a project on Kubernetes, a lot of testing is usually done.

Also, as a startup, the project is trying to keep costs down, since probably no clients (or just a few) are using the product yet.

To achieve this, we suggest the following path:

- Step 0 - develop on a K3s cluster running on an EC2 instance

- Step 1 - when you start stress testing or get your first clients, move to KOPS

- Step 2 - when HA, scalability and ease of management are needed, consider moving to EKS

For many projects, staying at Step 1 is perfectly fine!

Below, we explore the three options.

Assumptions ¶

We assume the binbash Leverage Landing Zone is deployed, an account called apps-devstg was created, and region us-east-1 is being used. In any case, you can adapt these examples to other scenarios.

K3s ¶

Goal ¶

A cluster with one node (master/worker) is deployed here.

Cluster Autoscaler can be used with K3s to scale nodes, but it takes enough work that moving to KOPS is justified.

[TBD]

KOPS ¶

See also here.

Goal ¶

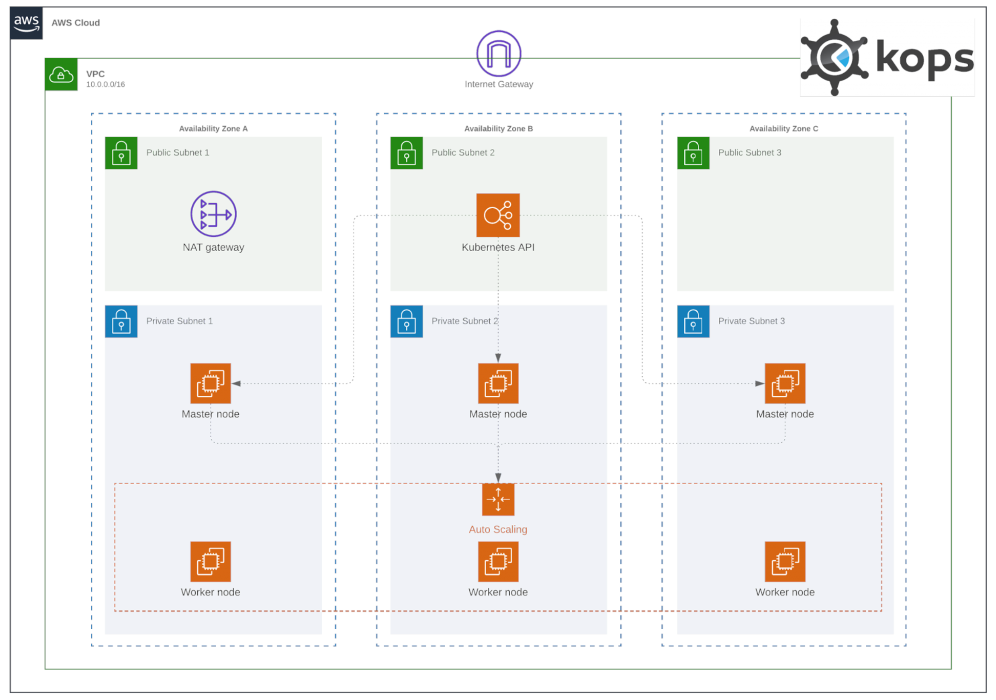

A gossip cluster (i.e. a cluster not exposed to the Internet; an Internet-exposed cluster can be created using Route53) with one master node and one worker node (with node autoscaling capabilities) will be deployed here.

More master nodes can be deployed (e.g. one per AZ). In fact, three are recommended for production-grade clusters.

It will be similar to what is described here, but with one master, one worker, and the load balancer for the API in the private network.

Procedure ¶

These are the steps:

- 0 - copy the KOPS layer to your binbash Leverage project.

  - paste the layer under the apps-devstg/us-east-1 account/region directory

  - for ease of use, the `k8s-kops --` directory can be renamed to `k8s-kops`

- 1 - apply the prerequisites

- 2 - apply the cluster

- 3 - apply the extras

Ok, take it easy. Here are the steps explained.

0 - Copy the layer ¶

A few methods can be used to download the KOPS layer directory into the binbash Leverage project.

E.g. this addon is a nice way to do it.

Paste this layer into the account/region chosen to host this, e.g. apps-devstg/us-east-1/, so the final layer is apps-devstg/us-east-1/k8s-kops/.

Warning

Do not change the 1-prerequisites, 2-kops and 3-extras directory names, since the scripts depend on them!
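The scripts rely on those directory names, so a quick check after copying can prevent a failed run later. Below is a minimal POSIX shell sketch. The function name check_kops_layer is hypothetical and not part of binbash Leverage.

```shell
# Hypothetical helper: verify that a copied KOPS layer still has the
# sub-directory names its scripts depend on.
check_kops_layer() {
  layer_dir="$1"   # e.g. apps-devstg/us-east-1/k8s-kops
  missing=0
  for sub in 1-prerequisites 2-kops 3-extras; do
    if [ ! -d "$layer_dir/$sub" ]; then
      echo "missing: $layer_dir/$sub" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Usage, from the root of your binbash Leverage project:
#   check_kops_layer apps-devstg/us-east-1/k8s-kops && echo "layer layout OK"
```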

1 - Prerequisites ¶

These are the requirements to create the KOPS cluster:

- a VPC for the cluster to live in

- a NAT gateway to reach the Internet

- an S3 bucket to store the KOPS state

- an SSH key (you have to create it)

Warning

If the NAT gateway is not in place, check here how to enable it using the binbash Leverage network layer.

Warning

If you are going to enable Karpenter, you need to tag the target subnets (i.e. the private subnets in your VPC) with:

"kops.k8s.io/instance-group/nodes" = "true"

"kubernetes.io/cluster/<cluster-name>" = "true"

We are assuming here that the worker Instance Group is called nodes. If you change the name or have more than one Instance Group, you need to adapt the first tag.
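You can manage these tags in code. For a quick test, you can also add them with the AWS CLI. Below is a minimal POSIX shell sketch. The helper karpenter_subnet_tags is hypothetical, and the subnet IDs in the usage comment are placeholders. The tag values follow the ones listed above.

```shell
# Hypothetical helper: print the two subnet tags above in the
# Key=...,Value=... shorthand accepted by `aws ec2 create-tags --tags`.
# $1: cluster name
# $2: worker Instance Group name (defaults to "nodes")
karpenter_subnet_tags() {
  cluster_name="$1"
  ig_name="${2:-nodes}"
  printf 'Key=kops.k8s.io/instance-group/%s,Value=true Key=kubernetes.io/cluster/%s,Value=true\n' \
    "$ig_name" "$cluster_name"
}

# Usage (placeholder subnet IDs; the unquoted $(...) intentionally splits
# the output into two tag arguments):
#   aws ec2 create-tags --resources subnet-0aaa subnet-0bbb \
#     --tags $(karpenter_subnet_tags clustername.k8s.local)
```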

Info

Note that DNS is not needed since this will be a gossip cluster.

Info

A new bucket is created so KOPS can store its state there.

By default, the account's base network is used. If you want to change this, check/modify this resource in the config.tf file:

data "terraform_remote_state" "vpc" {

Also, the shared VPC will be used to allow incoming traffic from there. This is because, by default in the binbash Leverage Landing Zone, the VPN server is created there.

cd into the 1-prerequisites directory.

Open the locals.tf file.

Here, these items can be updated:

- versions

- machine types (and max, min qty for masters and workers autoscaling groups)

- the number of AZs that will be used for master nodes.

Open the config.tf file.

Here, set the backend key if needed:

backend "s3" {

key = "apps-devstg/us-east-1/k8s-kops/prerequisites/terraform.tfstate"

}

Info

Remember that binbash Leverage has its own rules for this: the key name should match <account-name>/[<region>/]<layer-name>/<sublayer-name>/terraform.tfstate.
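The naming rule above can be written as a small function. This is handy for double-checking a key before running init. Below is a minimal POSIX shell sketch. state_key is a hypothetical helper, not a Leverage command. Empty arguments are skipped: the rule marks the region as optional, and the 2-kops key used later in this guide omits the sublayer.

```shell
# Hypothetical helper: build a backend key as
# <account-name>/[<region>/]<layer-name>/<sublayer-name>/terraform.tfstate
# Any empty argument is left out of the path.
state_key() {
  key=""
  for part in "$1" "$2" "$3" "$4"; do
    if [ -n "$part" ]; then
      key="${key}${part}/"
    fi
  done
  printf '%sterraform.tfstate\n' "$key"
}

# Usage:
#   state_key apps-devstg us-east-1 k8s-kops prerequisites
#   # prints apps-devstg/us-east-1/k8s-kops/prerequisites/terraform.tfstate
```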

Init and apply as usual:

leverage tf init

leverage tf apply

Warning

You will be prompted for ssh_pub_key_path. Enter the full path to your public SSH key (e.g. /home/user/.ssh/thekey.pub) and hit Enter.

A key managed by KMS can be used here. A regular key-in-a-file is used for this example, but you can change it as per your needs.
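Before answering the prompt, you may want to confirm that the path points to an existing public key. Below is a minimal POSIX shell sketch, assuming a regular OpenSSH key file. check_pub_key_path is a hypothetical helper.

```shell
# Hypothetical helper: sanity-check the value for the ssh_pub_key_path prompt.
# To create a key pair first, you can run:
#   ssh-keygen -t ed25519 -f ~/.ssh/thekey
# This writes thekey and thekey.pub.
check_pub_key_path() {
  path="$1"
  case "$path" in
    /*) ;;                                   # the prompt expects a full path
    *) echo "not an absolute path: $path" >&2; return 1 ;;
  esac
  [ -f "$path" ] || { echo "no such file: $path" >&2; return 1; }
  case "$path" in
    *.pub) ;;                                # the public half, not the private key
    *) echo "expected a .pub file: $path" >&2; return 1 ;;
  esac
  # OpenSSH public keys start with the key type, e.g. ssh-ed25519 or ssh-rsa.
  head -n 1 "$path" | grep -Eq '^(ssh-|ecdsa-|sk-)' ||
    { echo "not an OpenSSH public key: $path" >&2; return 1; }
}

# Usage:
#   check_pub_key_path "$HOME/.ssh/thekey.pub" && echo "$HOME/.ssh/thekey.pub"
```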

Info

Note that if, for some reason, the NAT gateway changes, this layer has to be applied again.

Info

Note that the role AWSReservedSSO_DevOps (the one created in SSO for DevOps) is added to system:masters. If you want to change the role, check devopsrole in the data.tf file.

2 - Apply the cluster with KOPS ¶

cd into the 2-kops directory.

Open the config.tf file and edit the backend key if needed:

backend "s3" {

key = "apps-devstg/us-east-1/k8s-kops/terraform.tfstate"

}

Info

Remember that binbash Leverage has its own rules for this: the key name should match <account-name>/[<region>/]<layer-name>/<sublayer-name>/terraform.tfstate.

Info

If you want to check the configuration:

make cluster-template

The final template is written to the cluster.yaml file.

If you are happy with the config (or you are not happy but you think the file is ok), let's create the OpenTofu files!

make cluster-update

Finally, apply the layer:

leverage tf init

leverage tf apply

The cluster can be checked with this command:

make kops-cmd KOPS_CMD="validate cluster"
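Once cluster.yaml has been reviewed, you can chain the remaining 2-kops steps above. Below is a minimal POSIX shell sketch, assuming it is sourced and run from inside 2-kops/. The functions run and kops_cluster_up and the DRY_RUN switch are hypothetical. They are not part of the layer's Makefile.

```shell
# Hypothetical wrapper: run a command, or just print it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Chain the steps in order; the chain stops at the first failing command.
kops_cluster_up() {
  run make cluster-update &&       # generate the OpenTofu files from cluster.yaml
  run leverage tf init &&
  run leverage tf apply &&         # still asks for confirmation interactively
  run make kops-cmd KOPS_CMD="validate cluster"
}

# Usage:
#   make cluster-template            # review cluster.yaml first
#   DRY_RUN=1 kops_cluster_up        # print the commands only
#   kops_cluster_up                  # run them
```

The dry-run mode prints each command instead of running it. This lets you check the order before touching any infrastructure.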

Accessing the cluster ¶

There are two questions here.

One is how to expose the cluster so Apps running in it can be reached.

The other one is how to access the cluster's API.

For the first one:

Since this is a gossip cluster, and as per the KOPS docs: "When using gossip mode, you have to expose the kubernetes API using a loadbalancer. Since there is no hosted zone for gossip-based clusters, you simply use the load balancer address directly. The user experience is identical to standard clusters. kOps will add the ELB DNS name to the kops-generated kubernetes configuration."

So, we need to create a load balancer with public access.

For the second one, we need to connect to the VPN (access to the network in use was set up previously) and hit the LB. A load balancer was deployed along with the cluster so you can reach the K8s API.

Access the API ¶

Run:

make kops-kubeconfig

A file named after the cluster is created with the kubeconfig content (admin user, so keep it safe). Export it and use it!

export KUBECONFIG=$(pwd)/clustername.k8s.local

kubectl get ns

Warning

You have to be connected to the VPN to reach your cluster!
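You can wrap the export above so it fails early when the kubeconfig file is missing. Below is a minimal POSIX shell sketch, assuming it is sourced (so the export reaches your shell) in the directory where make kops-kubeconfig wrote the file. use_kops_kubeconfig is hypothetical. It also checks for the .k8s.local suffix, which is how kOps recognizes a gossip cluster name.

```shell
# Hypothetical helper: export KUBECONFIG pointing at the file named after
# the cluster in the current directory.
use_kops_kubeconfig() {
  cluster_name="$1"   # e.g. clustername.k8s.local
  case "$cluster_name" in
    *.k8s.local) ;;
    *) echo "gossip cluster names end in .k8s.local: $cluster_name" >&2; return 1 ;;
  esac
  if [ ! -f "$(pwd)/$cluster_name" ]; then
    echo "not found: $(pwd)/$cluster_name (run make kops-kubeconfig first)" >&2
    return 1
  fi
  export KUBECONFIG="$(pwd)/$cluster_name"
}

# Usage (connected to the VPN):
#   use_kops_kubeconfig clustername.k8s.local && kubectl get ns
```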

Access Apps ¶

You should use some sort of ingress controller (e.g. Traefik, Nginx) and set up ingresses for the apps (see Extras).

3 - Extras ¶

Copy the KUBECONFIG file to the 3-extras directory, e.g.:

cp ${KUBECONFIG} ../3-extras/

cd into 3-extras.

Set the name of this file and the context in the config.tf file.
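To find the two values for config.tf, you can read the file name and the current context straight from the kubeconfig. Below is a minimal POSIX shell sketch, assuming awk is available and the kubeconfig has a top-level current-context: line. kubeconfig_values is hypothetical. If kubectl is installed, kubectl config current-context prints the context of the active KUBECONFIG.

```shell
# Hypothetical helper: print the kubeconfig file name and its current
# context, the two values to set in 3-extras/config.tf.
kubeconfig_values() {
  file="$1"
  [ -f "$file" ] || { echo "no such file: $file" >&2; return 1; }
  printf 'file: %s\n' "$(basename "$file")"
  # Take the value of the top-level current-context key, dropping any quotes.
  printf 'context: %s\n' \
    "$(awk '/^current-context:/ {gsub(/"/, "", $2); print $2; exit}' "$file")"
}

# Usage, from 3-extras/ after copying the file:
#   kubeconfig_values ./clustername.k8s.local
```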

Set what extras you want to install (e.g. traefik = true) and run the layer as usual:

leverage tf init

leverage tf apply

You are done!

EKS ¶

See also here.

Goal ¶

A cluster with one node (worker) per AZ and the control plane managed by AWS is deployed here.

Cluster autoscaler is used to create more nodes.

Procedure ¶

These are the steps:

- 0 - copy the K8s EKS layer to your binbash Leverage project.

  - paste the layer under the apps-devstg/us-east-1 account/region directory

- 1 - create the network

- 2 - add the path to the VPN server

- 3 - create the cluster and its dependencies/components

- 4 - access the cluster

0 - Copy the layer ¶

A few methods can be used to download the K8s EKS layer directory into the binbash Leverage project.

E.g. this addon is a nice way to do it.

Paste this layer into the account/region chosen to host it, e.g. apps-devstg/us-east-1/, so the final layer is apps-devstg/us-east-1/k8s-eks/. Note that you can change the layer name (and the CIDRs and cluster name) if you already have an EKS cluster in this Account/Region.
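When you change the CIDRs for a second k8s-eks layer, check that the new range does not overlap one already in use. Below is a minimal sketch using POSIX shell arithmetic, with no AWS calls. ip_to_int and cidrs_overlap are hypothetical helpers and assume well-formed IPv4 input.

```shell
# Hypothetical helper: convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  old_ifs=$IFS
  IFS=.
  set -- $1          # split "10.1.2.3" into 10 1 2 3
  IFS=$old_ifs
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Hypothetical helper: return 0 if CIDR blocks $1 and $2 overlap, 1 otherwise.
# Two blocks overlap exactly when they share the first N bits, where N is
# the shorter of the two prefix lengths.
cidrs_overlap() {
  net1=${1%/*}; len1=${1#*/}
  net2=${2%/*}; len2=${2#*/}
  len=$len1
  if [ "$len2" -lt "$len1" ]; then len=$len2; fi
  mask=$(( (0xFFFFFFFF << (32 - $len)) & 0xFFFFFFFF ))
  i1=$(ip_to_int "$net1")
  i2=$(ip_to_int "$net2")
  [ $(( $i1 & $mask )) -eq $(( $i2 & $mask )) ]
}

# Usage:
#   cidrs_overlap 10.0.0.0/16 10.1.0.0/16 || echo "no overlap, safe to use"
```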

1 - Create the network ¶

First, go into the network layer (e.g. apps-devstg/us-east-1/k8s-eks/network) and configure the OpenTofu S3 backend key, the CIDR for the network, names, etc.

cd apps-devstg/us-east-1/k8s-eks/network

Then, from inside the layer run:

leverage tf init

leverage tf apply

2 - Add the path to the VPN server ¶

Since we are working on a private subnet (as per binbash Leverage and AWS Well-Architected Framework best practices), we need to set up the VPN routes.

If you are using the Pritunl VPN server (as per the binbash Leverage recommendations), add a route to the CIDR set in step 1 on the server you use to connect to the VPN.

Then, connect to the VPN to access the private space.

3 - Create the cluster ¶

First, go into each layer and configure the OpenTofu S3 backend key, names, addons, the components to install, etc.

cd apps-devstg/us-east-1/k8s-eks/

Then apply the layers as follows:

leverage tf init --layers cluster,identities,addons,k8s-components

leverage tf apply --layers cluster,identities,addons,k8s-components

4 - Access the cluster ¶

Go into the cluster layer:

cd apps-devstg/us-east-1/k8s-eks/cluster

Use the embedded kubectl to configure the context:

leverage kubectl configure

Info

You can export the context to use it with a standalone kubectl.

Once this process is done, you'll end up with temporary credentials created for kubectl.

Now you can try a kubectl command, e.g.:

leverage kubectl get ns

Info

If you've followed the binbash Leverage recommendations, your cluster will live on a private subnet, so you need to connect to the VPN in order to access the K8s API.